Why Probabilistic Record Linkage Still Matters

- Gandhinath Swaminathan

- 24 hours ago

- 5 min read

Probabilistic record linkage remains essential because identity data is inherently uncertain. Rather than forcing a brittle “yes/no” rule, match decisions should reflect this underlying ambiguity.

The value is simple: A scored match provides a dial for risk, not a switch.

Why probabilities win here

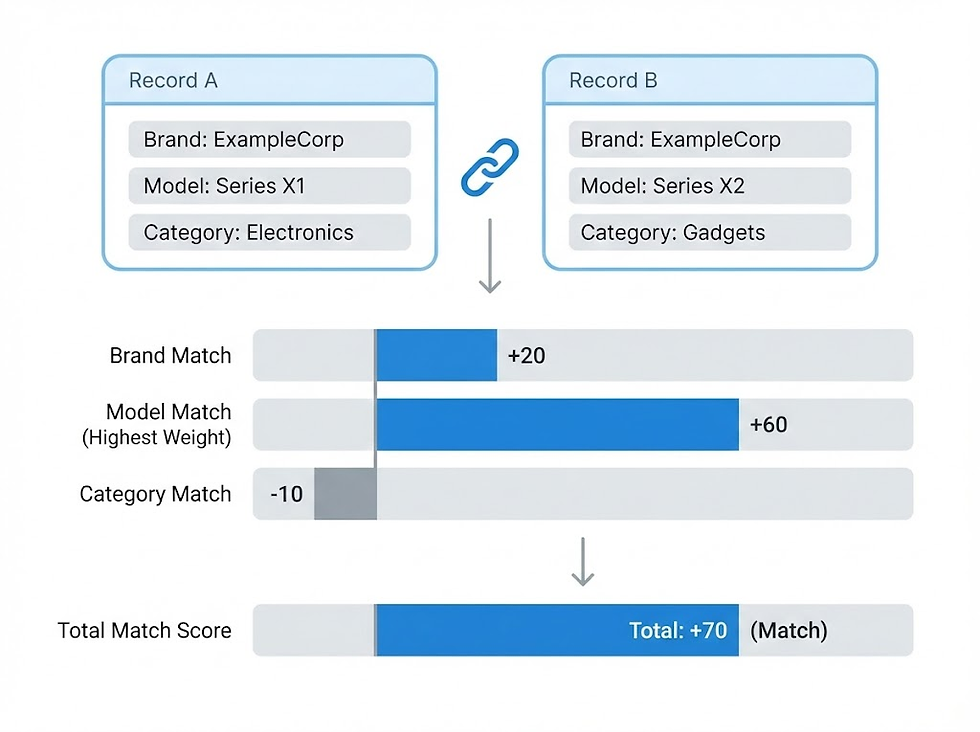

Record linkage is a decision under uncertainty: two records can agree on some fields, disagree on others, and still refer to the same person.

Probabilistic methods treat each field comparison as evidence, then combine that evidence into a single score. This score supports three distinct classifications:

Match

Non-match

Uncertain (for cases that sit in between)

This is the core difference from deterministic rules: the model can say “these look similar, but not similar enough, given how common this pattern is in the population.”

Think about three product records from three retailers:

Sony Turntable - PSLX350H

Sony PS-LX350H Belt-Drive Turntable

Sony Record Player, Model PS-LX350H, Black

Probabilistic record linkage instead computes a match score based on agreements and disagreements across fields—brand, model token, category terms, color—and then turns that into an estimated match probability. That probability becomes a control for business risk.

Matching requirements vary significantly by use case:

Marketing: High-reach scenarios might accept matches with a probability of 0.8 and above, tolerating minor errors (e.g., Sony PS-LX350H vs. PS-LX370H) to maximize a unified audience.

Credit or Healthcare: High-stakes environments may demand a 0.99 threshold, pushing borderline pairs into a manual review queue to ensure accuracy.

The same underlying model supports both decisions simply by adjusting thresholds.

Maintaining this level of coherence is nearly impossible with hard-coded rules, which require complete re-architecting for every new business requirement.

False merges and false splits impact business outcomes directly:

False Merges: Misidentifying a PS-LX350H and a PS-LX370H as the same entity corrupts pricing, attribution, and inventory counts.

False Splits: Treating the same product as several distinct SKUs fragments metrics and degrades any learning system that relies on them.

Probabilistic linkage provides a dial for that trade-off and a way to explain how it has been set.

The Fellegi–Sunter Algorithm

Fellegi–Sunter formalizes linkage by comparing record pairs and computing weights from agreement and disagreement patterns across fields.

Each comparison outcome (for example “first name exact match” or “DOB mismatch”) gets a weight based on two probabilities:

𝑚: probability of seeing this agreement pattern if the pair is a true match

u: probability of seeing this pattern if the pair is a true non‑match

Intuition: an agreement is strong evidence only when it's rare among non-matches (low u), and a disagreement is strong negative evidence only when matches usually agree on that field (high 𝑚).

For the Sony titles, exact or near‑exact agreement on PS‑LX350H has high 𝑚 and tiny u, so it contributes much more weight than brand equality on Sony, which is common even among non‑matching products.

The original Fellegi–Sunter formulation often assumes conditional independence across linkage fields, even though real-world fields (street, city, postal code) tend to correlate.

The important part: you have a traceable reason for each decision. When someone asks, “Why did we merge these product records?”, you can point to model agreement and historical 𝑚/u values, instead of a pile of ad‑hoc rules.

Parameter estimation (𝑚/u, EM)

In practice, you do not know 𝑚 and u ahead of time; they come from data.

If you have labeled pairs (for example, catalog curation or manual review of candidate matches), you can estimate 𝑚 and u for each field and comparison level directly: count how often true matches agree, and how often true non‑matches agree, then plug those into the Fellegi–Sunter weights.

When labels are limited, many implementations estimate parameters using expectation maximization (EM), which alternates between

E‑step: Given the current 𝑚/u values, compute the probability that each pair is a match based on its comparison pattern.

M‑step: Re‑estimate 𝑚 and u from these soft match assignments, treating high‑probability matches as if they were labeled.

The 𝑚/u ratio acts like a Bayes factor that updates prior odds of a match into posterior odds after observing field agreements.

Bayesian record linkage (latent entities)

Bayesian linkage goes one level deeper than pair scoring: it treats the linkage structure itself as an unknown object and estimates it jointly with model parameters.

Instead of asking, for each pair of records, “Are these the same?”, a Bayesian linkage model asks, “How many distinct products are in this dataset, and which records belong to each product?” while treating the records themselves as noisy views of these latent items.

In that view, your three PS‑LX350H titles are different noisy views of the same latent product, while Sony Turntable – PSLX370H will almost always form its own latent product, because the distortion model makes jumping from 350H to 370H extremely unlikely.”

The distinguishing benefit is uncertainty propagation: instead of producing a single linkage decision, Bayesian methods sample from the posterior distribution over linkages, which lets downstream analysis reflect linkage uncertainty. In the SMERED line of work, the model represents records as indirectly connected through latent individuals and uses MCMC moves (including split-merge style updates) to explore plausible linkage configurations.

Risk, calibration, and governance

Probabilistic linkage gives you a single match score that you can tie directly to money and risk: a Sony PS‑LX350H pair at 0.85 may be fine for marketing, while the same pair would be held to 0.99 for credit or healthcare use cases.

Calibration means that scores line up with reality—among pairs scored near 0.9, roughly nine out of ten should be true matches on a reviewed sample—so thresholds become explicit business decisions instead of guesswork.

Fellegi–Sunter helps governance because each decision can be explained in terms of field agreements, their 𝑚 / u values, and how those combine into a score for a given pair. Bayesian methods go further by exposing full posterior match probabilities, so auditors and risk teams can see not just a link, but how certain the system is about that link.

Tools and further reading

Several libraries embody these methods and are worth knowing:

Splink is a Python package for probabilistic record linkage that implements the Fellegi–Sunter model and supports EM-based parameter estimation.

Dedupe is a Python library that supports record linkage across data sources and uses an interactive labeling loop (active learning) to gather training examples efficiently.

The Python Record Linkage Toolkit (recordlinkage) provides building blocks for indexing, comparing records, and applying classifiers for linkage and deduplication.

If you’re looking to go beyond academic papers, these books provide a broader perspective:

Principles of Data Integration – Doan, Halevy, Ives: Core concepts in data integration and how record linkage fits into larger integration pipelines.

Hands‑On Entity Resolution – Michael Shearer: Practical Python‑focused guide to building and evaluating entity resolution workflows with real datasets.

Data Matching – Peter Christen: Classic reference on record linkage, comparison functions, and quality evaluation across the full linkage pipeline.

Record Linkage Techniques – 1997 (Proceedings): Proceedings volume collecting foundational papers on probabilistic and statistical record linkage from government and academic projects.

What comes next

Probabilistic linkage gives you a way to quantify identity, but it is only one layer in a modern entity resolution stack.

In the next article, the focus shifts to how you actually assemble that stack in practice to build a production workflow that your data team can run, monitor, and explain.

Series: Entity Resolution

Post 2: From exact match to HNSW

Post 3: BM25 Still Wins

Post 4: Hybrid Search and RRF

Post 5: Stop Mixing Scores. Use SPLADE

Post 6: Attention Filters SPLADE

Post 7: Heterogeneous Graph Matching

Post 8: Probabilistic Record Linkage (this post)

Comments